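Selenium can be used for scraping, and also for a crawler that checks whether a website's pages have been indexed by a search engine. Its uses are broad, although it was originally built for automated front-end testing. The script below drives a headless Chrome to search Baidu for a keyword, then prints the result count and each result's title and link.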
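
For the indexing check, one common approach is to search with the `site:` operator and see whether any results come back. This approach is an assumption here and is not what the script in this post does. The sketch only builds such a search URL with the standard library. The results-page address `https://www.baidu.com/s` and its `wd` query parameter are assumptions about Baidu's URL scheme, and `site_query_url` is a hypothetical helper.

```python
from urllib.parse import urlencode

# Assumption: Baidu serves results at /s and reads the query from the "wd" parameter.
BAIDU_SEARCH_URL = "https://www.baidu.com/s"


def site_query_url(domain: str) -> str:
    """Build a search URL whose query is 'site:<domain>' (results limited to that domain)."""
    # urlencode percent-encodes the ':' so the query survives as one parameter value
    return BAIDU_SEARCH_URL + "?" + urlencode({"wd": "site:" + domain})


print(site_query_url("example.com"))
# https://www.baidu.com/s?wd=site%3Aexample.com
```

The resulting URL could be opened directly with `driver.get(...)` instead of typing into the search box.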

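```python
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions
from selenium.webdriver.support.wait import WebDriverWait
from bs4 import BeautifulSoup
import re


def getChrome():
    options = webdriver.ChromeOptions()
    options.add_argument('--headless')  # headless: run without a visible window
    # options.add_argument('--disable-gpu')  # works around a bug in older headless Chrome on Windows
    # options.add_argument('--no-sandbox')  # lets Chrome start as root; by default it refuses to run as root

    # Proxy settings
    # options.add_argument('--proxy-server=http://' + proxy_ip())
    # Careful: no spaces around '='. This form does NOT work: --proxy-server = http://202.20.16.82:10152

    options.add_argument(
        '--user-agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) '
        'Chrome/81.0.4044.129 Safari/537.36')

    # Drop the enable-automation switch (hides the "controlled by automated test software" infobar).
    # Background: [Detecting Selenium+WebDriver with one line of JS, and how to counter it](https://www.cnblogs.com/xieqiankun/p/hide-webdriver.html)
    options.add_experimental_option('excludeSwitches', ['enable-automation'])

    chrome_driver = r"D:\Develop\webdriver\chromedriver.exe"
    # Selenium 4 takes the driver path via a Service object (the old executable_path=
    # keyword was removed in 4.10). From 4.6 on, Selenium Manager can usually locate a
    # matching driver itself, so webdriver.Chrome(options=options) also works.
    browser = webdriver.Chrome(service=Service(chrome_driver), options=options)
    return browser
```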
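
The options in `getChrome()` are plain Chrome command-line switches, each passed as a single string. The sketch below gathers them in one place so the "no spaces around `=`" rule is applied consistently. `build_chrome_args` is a hypothetical helper, and the proxy address is the example from the comment in `getChrome()`, not a working proxy.

```python
from typing import List, Optional


def build_chrome_args(headless: bool = True,
                      proxy: Optional[str] = None,
                      user_agent: Optional[str] = None) -> List[str]:
    """Return Chrome switches as single '--name=value' strings, with no spaces around '='."""
    args = []
    if headless:
        args.append("--headless")
    if proxy:
        # proxy is "host:port"; strip stray whitespace so no spaces end up in the switch
        args.append("--proxy-server=http://" + proxy.strip())
    if user_agent:
        args.append("--user-agent=" + user_agent)
    return args


print(build_chrome_args(proxy="202.20.16.82:10152"))
# ['--headless', '--proxy-server=http://202.20.16.82:10152']
```

Inside `getChrome()`, each returned string would then be handed to `options.add_argument(arg)` in a loop.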

def baidu():

keyword = 'python'

driver = getChrome()

driver.set_page_load_timeout(15)

driver.set_window_size(2126, 1228)

driver.get("https://www.baidu.com/")

driver.find_element(By.ID, "kw").click()

driver.find_element(By.ID, "kw").send_keys(keyword)

driver.implicitly_wait(5) # 智能等待

driver.find_element(By.ID, "su").click()

try:

# 最多等待10秒直到浏览器标题栏中出现我希望的字样(比如查询关键字出现在浏览器的title中)

WebDriverWait(driver, 10).until(expected_conditions.title_contains(keyword))

print(driver.title)

bsobj = BeautifulSoup(driver.page_source, features="html.parser")

num_text_element = bsobj.find('span', {'class': 'nums_text'})

print(num_text_element.text)

nums = filter(lambda s: s == ',' or s.isdigit(), num_text_element.text)

print(''.join(nums))

elements = bsobj.findAll('div', {'class': re.compile('c-container')})

for element in elements:

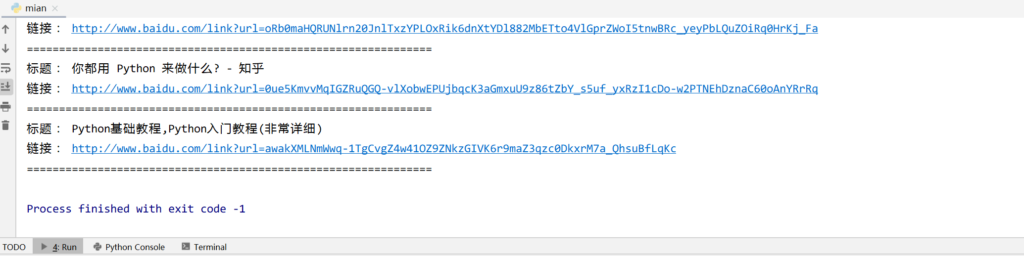

print('标题:', element.h3.a.text)

print('链接:', element.h3.a['href'])

print('===============================================================')

finally:

driver.close()

# 退出,清除浏览器缓存

driver.quit()
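
`baidu()` relies on two kinds of waiting. `implicitly_wait(5)` is a session-wide setting: every `find_element` call keeps retrying for up to 5 seconds. `WebDriverWait(driver, 10).until(...)` is an explicit wait for one condition: it polls the condition (every 0.5 s by default) until it returns something truthy or the timeout expires. The sketch below models that explicit wait without a browser, and also shows a sturdier way to turn the result-count label into an integer. `wait_until` and `parse_result_count` are hypothetical helpers; the real `WebDriverWait` raises Selenium's `TimeoutException` rather than the built-in `TimeoutError`, and the sample label is an assumed example of Baidu's result-count text.

```python
import re
import time


def wait_until(condition, timeout=10.0, poll_frequency=0.5):
    """Poll condition() until it returns a truthy value; raise TimeoutError after `timeout` seconds.

    A simplified model of WebDriverWait(driver, timeout).until(condition):
    no exception filtering, just polling.
    """
    deadline = time.monotonic() + timeout
    while True:
        value = condition()
        if value:
            return value  # like until(), hand back the truthy result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(poll_frequency)


def parse_result_count(text):
    """Extract the first comma-grouped number from `text` as an int (None if there is none)."""
    match = re.search(r"\d[\d,]*", text)
    return int(match.group().replace(",", "")) if match else None


checks = {"n": 0}


def title_ready():
    # stand-in for title_contains(keyword): becomes true on the third poll
    checks["n"] += 1
    return checks["n"] >= 3


print(wait_until(title_ready, timeout=5, poll_frequency=0.01))  # True
print(parse_result_count("百度为您找到相关结果约100,000,000个"))  # 100000000
```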

```python
if __name__ == "__main__":
    while True:  # runs forever, one new browser per search; stop with Ctrl+C
        baidu()
```
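
The `while True` loop in the main block starts a new browser and queries Baidu again as soon as each run finishes, indefinitely. If the goal is periodic checking, a bounded loop with a pause between runs is gentler on both the machine and the site. The sketch below is one way to write it; `run_repeatedly` and its 30-second default interval are illustrative choices, not values from the original script.

```python
import time


def run_repeatedly(task, times=None, interval=30.0):
    """Call task() `times` times (forever if None), sleeping `interval` seconds between runs.

    An exception in one run is printed and does not stop later runs.
    Returns the number of runs attempted.
    """
    runs = 0
    while times is None or runs < times:
        try:
            task()
        except Exception as exc:  # e.g. a page-load timeout in one run
            print("run failed:", exc)
        runs += 1
        if times is None or runs < times:
            time.sleep(interval)  # no sleep after the final run
    return runs


# Hypothetical usage with the function from this post:
# run_repeatedly(baidu, times=10, interval=30)
```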